After some thought, I feel compelled to expand a bit on my post regarding the US National Ranking List (NRL). Actually, my thoughts on the subject apply to points lists and athlete ranking in XC skiing more generally.

Ranking athletes via some numerical system can be both fun and necessary. It’s fun for skiing fans like myself who enjoy numbers and working with data. It’s sometimes necessary as a tool to inject some objectivity into distinguishing between high and low performing skiers. This tool can be used for many ends: seeding racers for start lists, selecting athletes for national teams, selecting athletes to participate in major events, identifying promising young athletes, etc.

The US NRL recently came in for some (to my ears, mild) criticism. Specifically, since it is based on an athlete’s best four races over the previous 12 months, it is possible for skiers to remain ranked very high even though their performance this season has been underwhelming. The NRL has been a subject of dispute in the past, and I’m sure it will be criticized again no matter how it may be altered or improved upon.

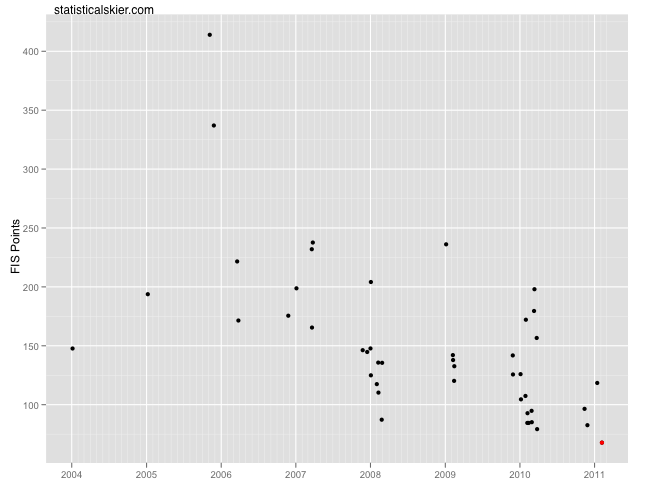

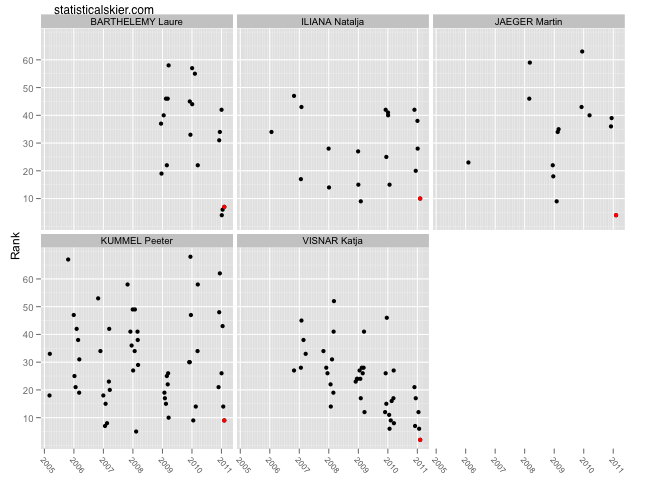

The specific “problem” in this case is rooted in the fact that ranking athletes by the average of their best four (or in FIS’s case, five) races over an entire calendar year makes it easy to ascend the ranking list rather quickly, but coming back down happens very slowly as races move outside the one year window. Two excellent races can rocket you up the rankings, but then they stick around for an entire year no matter how badly you ski from that point on.
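To make the stickiness concrete, here is a minimal sketch of a best-four average over a rolling one year window. It assumes a points scale where lower is better (as with FIS points), and the function name, dates and point values are all invented for illustration; this is not the actual NRL calculation.

```python
from datetime import date, timedelta

def best_n_average(races, as_of, n=4, window_days=365):
    """Average of the n best (lowest) results in the window ending at as_of.

    races is a list of (race_date, points) pairs. Returns None when fewer
    than n races fall inside the window.
    """
    start = as_of - timedelta(days=window_days)
    in_window = sorted(p for d, p in races if start < d <= as_of)
    if len(in_window) < n:
        return None
    return sum(in_window[:n]) / n

# Hypothetical skier: a strong winter, then a clearly weaker following season.
races = [
    (date(2010, 1, 5), 40.0),
    (date(2010, 1, 7), 42.0),
    (date(2010, 2, 10), 60.0),
    (date(2010, 3, 1), 62.0),
    (date(2010, 11, 20), 95.0),
    (date(2010, 12, 4), 100.0),
    (date(2010, 12, 18), 98.0),
    (date(2011, 1, 3), 102.0),
]

after_good_season = best_n_average(races, date(2010, 3, 15))
during_slump = best_n_average(races, date(2011, 1, 4))
after_expiry = best_n_average(races, date(2011, 3, 15))
print(after_good_season, during_slump, after_expiry)
```

In this made-up example the average is identical right after the good season and in the middle of the slump, because the four old races are still the best four in the window. It only moves once those races age out.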

There are tons of (fairly simple) ways to fix this issue, but I’d rather people focus on a slightly different topic than the technicalities of points and ranking lists. Instead, I’d like to discuss what it means to use data to help one make a decision and how this pertains to using data in the world of XC skiing.

There is an unfortunate tendency for people to expect data to literally tell them the answer to a question, unequivocally. I think people have a mental model of science that goes something like this:

- Scientist asks question.

- Scientist collects data.

- Data tells scientist what the answer to her question is.

While not entirely false, this is badly misleading. The truth is something closer to this:

- Scientist asks question.

- Scientist collects data.

- Scientist uses her judgement on how to model the data.

- Scientist uses her judgement on how to interpret the results of her model.

- Scientist uses her judgement on how well (if at all) any of this answers her original question.

Obviously, I’m being very simplistic here to make a point. To be sure, data can be an enormous help in answering questions. But it does not replace human judgement; it augments it.

How does this relate to the NRL? When I hear cries for more purely “objective” criteria in XC skiing, I often get the sense that people want the data to make decisions for them. I think this is both lazy and dangerous. No ranking list, no matter how well designed, will perfectly capture the notion of skiing ability.

Even so, the NRL isn’t “wrong”. It’s doing exactly what it was designed to do: rank people based on the average of their best four races over the past year. The “wrongness” stems from the perceived disconnect between the ranking list and what we want the list to tell us. It would be more productive, I think, for people to think about what it is they want the NRL to measure.

It’s unreasonable to expect the NRL, as currently implemented, to reliably identify skiers who are performing the best right now. There will surely be some significant overlap between skiers ranked well on the NRL and skiers racing well right now, but we shouldn’t necessarily expect this given how the NRL and similar tools are designed. Also, despite our fondest wishes, it’s a stretch to expect these lists to accurately predict who will ski the fastest in the future. Generally speaking, the person ranked 5th will ski faster than the person ranked 50th over the next few races, but how meaningful are the differences between 5th and 6th? 5th and 8th?

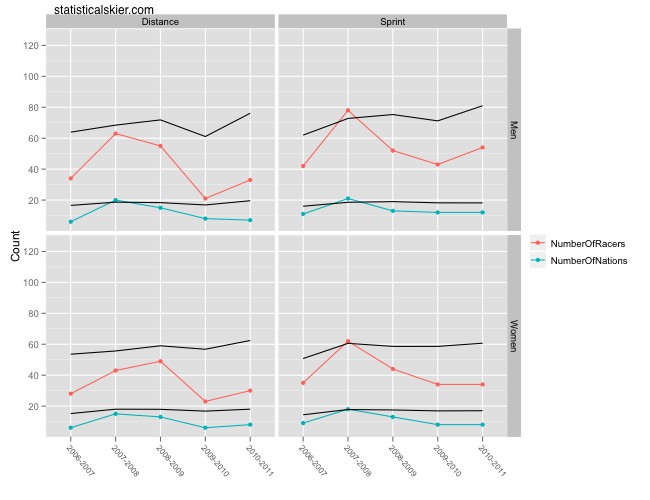

The former problem is easily addressed from a technical standpoint by simply looking at races over a shorter time window, or down-weighting older races. You needn’t alter the NRL itself if all you want is a tool for team selection. [1. Using shorter time windows involves a sticky trade-off in that it then becomes crucial for athletes to attend every top NRL race. Every athlete would need to get four distance and four sprint races by early January. If everything goes well, this may not be a problem. But weather can interfere, causing races to be canceled, so it would be risky to skip any. There were essentially 7 distance races and 6 sprints available to domestic skiers this season through US Nationals (although two of those “sprints” were only qualifiers). That doesn’t leave a lot of margin for error.]
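As one illustration of down-weighting older races, here is a rough sketch that swaps the best-four rule for a recency-weighted average of all races, where a race's weight halves every `half_life_days`. The function name, the 90 day half-life and the sample results are assumptions chosen for the example, not anything the NRL or FIS actually uses, and lower points are again assumed to be better.

```python
from datetime import date

def recency_weighted_average(races, as_of, half_life_days=90.0):
    """Weighted mean of points, with each race's weight halving every half_life_days.

    races is a list of (race_date, points) pairs. Races dated after as_of
    are ignored. Returns None if no race is usable.
    """
    weighted_sum = 0.0
    weight_total = 0.0
    for race_date, points in races:
        age_days = (as_of - race_date).days
        if age_days < 0:
            continue  # race has not happened yet as of this date
        weight = 0.5 ** (age_days / half_life_days)
        weighted_sum += weight * points
        weight_total += weight
    if weight_total == 0:
        return None
    return weighted_sum / weight_total

# Hypothetical skier: one excellent race 90 days ago, one poor race today.
example = [
    (date(2010, 10, 3), 40.0),
    (date(2011, 1, 1), 100.0),
]
print(recency_weighted_average(example, date(2011, 1, 1)))
```

The old race still counts, but only half as much as the one from today, so the rating drifts toward current form instead of waiting a full year for old results to expire. The price is the same trade-off as a shorter window: skipped or canceled races matter more.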

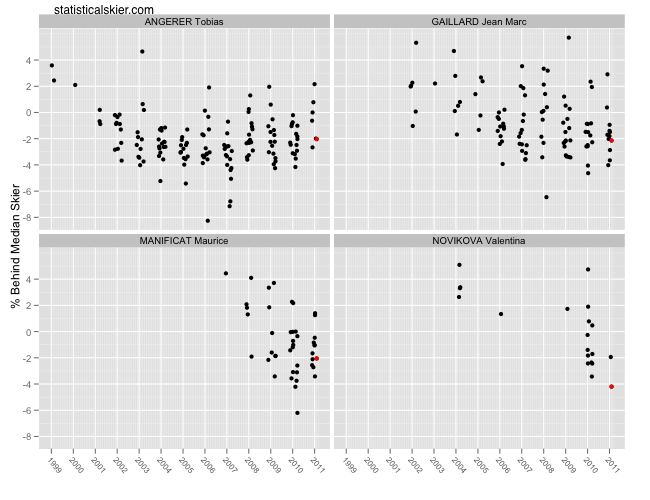

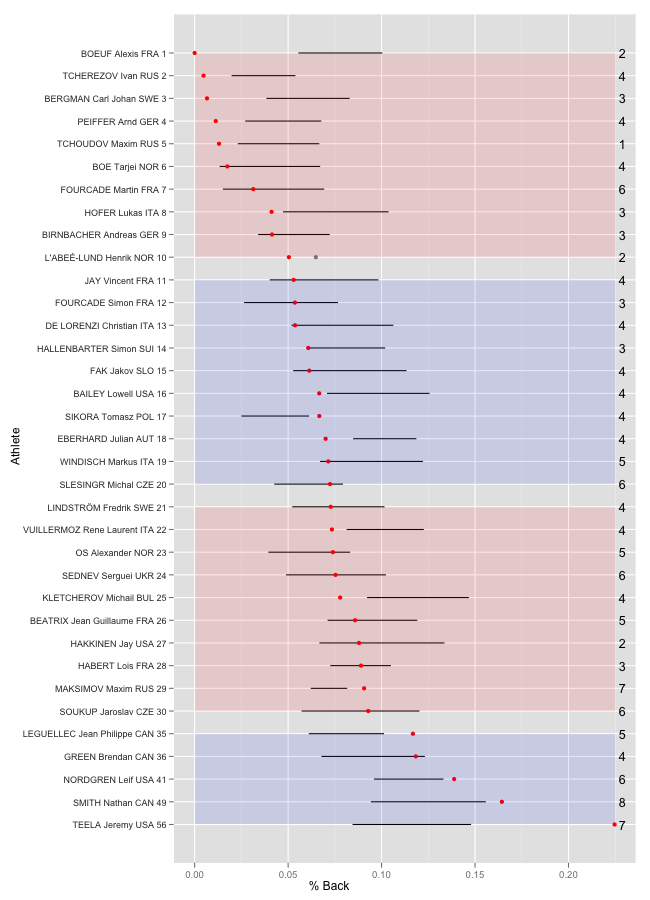

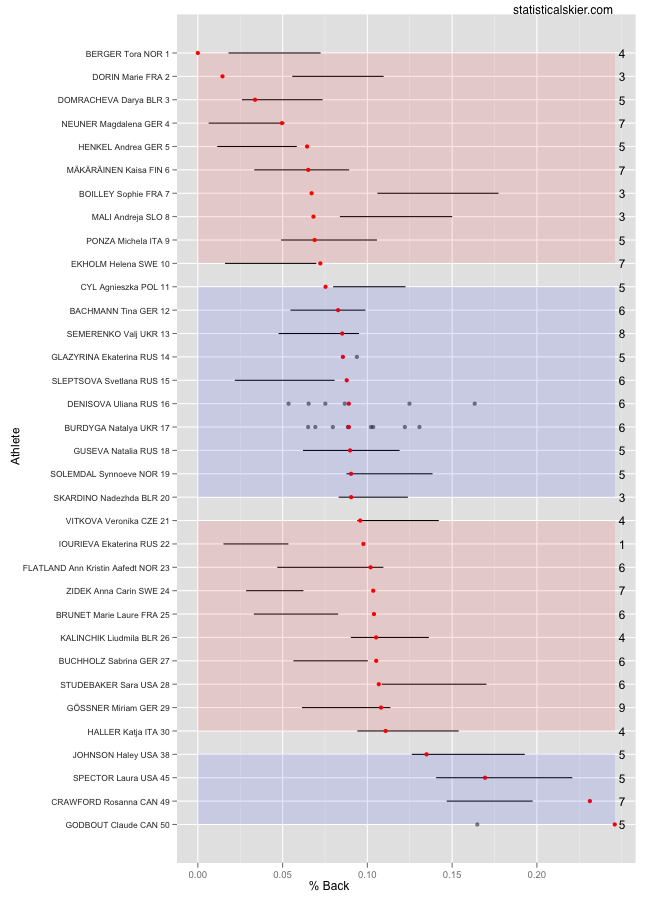

The latter problem is just inherent to the sport. The entire reason I include error bars in my athlete rankings for World Cup skiers is to show how much precision averaging each skier’s best N races can really achieve (not as much as we’d like). There isn’t really a ‘solution’; ski racing is variable, so predicting who’s going to ski fast next month (or next week, or next year) will always be a sizable gamble. No amount of statistical trickery will fix that.
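One generic way to put error bars on a best-N average is a bootstrap: resample a skier's races with replacement many times and see how much the best-N average moves around. The sketch below is only an illustration under that assumption, not a description of how any particular ranking list's error bars are produced; the function names, the 90% level and the sample points are made up.

```python
import random

def best_n_mean(points, n):
    """Mean of the n lowest values (lower points assumed to be better)."""
    return sum(sorted(points)[:n]) / n

def bootstrap_interval(points, n=4, reps=2000, level=0.9, seed=0):
    """Percentile bootstrap interval for the best-n mean of one skier's results."""
    if len(points) < n:
        raise ValueError("need at least n results")
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    stats = sorted(
        best_n_mean(rng.choices(points, k=len(points)), n)
        for _ in range(reps)
    )
    tail = (1.0 - level) / 2.0
    lo = stats[int(tail * (reps - 1))]
    hi = stats[int((1.0 - tail) * (reps - 1))]
    return lo, hi

# Hypothetical season of results for one skier.
season = [48.0, 52.0, 55.0, 61.0, 66.0, 70.0, 83.0, 90.0]
low, high = bootstrap_interval(season)
print(best_n_mean(season, 4), low, high)
```

If two skiers' intervals overlap heavily, the gap between, say, 5th and 6th is probably not telling you much.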

I draw two conclusions from this. First, attempts to design perfectly objective team selection criteria using point systems and ranking lists will fail, and will fail spectacularly. If you show me the most advanced, sophisticated ranking system in existence (e.g. Elo) I’ll show you ways in which it could potentially fail and probably does in practice. Ranking lists should be a guide for subjective, human-based decision making, not a rulebook for an automaton. The goal should be to design a system that better augments human judgement, not one that is intended to completely supplant it.

Second, the flaws in the points lists and ranking systems we do use should be evaluated in terms of how likely they are to lead us to make wrong decisions. For example, the NRL was probably “wrong” to rank Caitlin Compton 3rd, for the purposes of selecting a World Championships team. Ultimately, though, she wasn’t selected for the team, so I’m left wondering what the big deal is. If we can identify and fix a perceived flaw in the NRL, fine. But perhaps we should only flip out about it to the extent that it leads to poor decisions. [2. Obviously, this last point depends on what we’re using the list for. We can’t have race organizers using their “discretion” to seed athletes differently if they think the current ranking list is wrong.]

Going forward, it seems most productive to me to (a) decide what you want to measure, as precisely as you can, (b) develop the best method you can for measuring that quantity and then (c) stipulate that it will be used only as a guide for decision making, not followed to the letter.

In that light, the process that John Farra described in the above linked FasterSkier article seems rather sensible to me. I was particularly struck by his mentioning that they started with the specific events at WSC and worked backwards from there. One area of improvement might be to look at better ways of organizing, displaying and analyzing data that highlight specific strengths/weaknesses in particular techniques or events, since the NRL currently doesn’t help much with this. But generally, I applaud the basic philosophy: data as tool and guide, not as a rulebook.
